Govern and control

Unified Governance

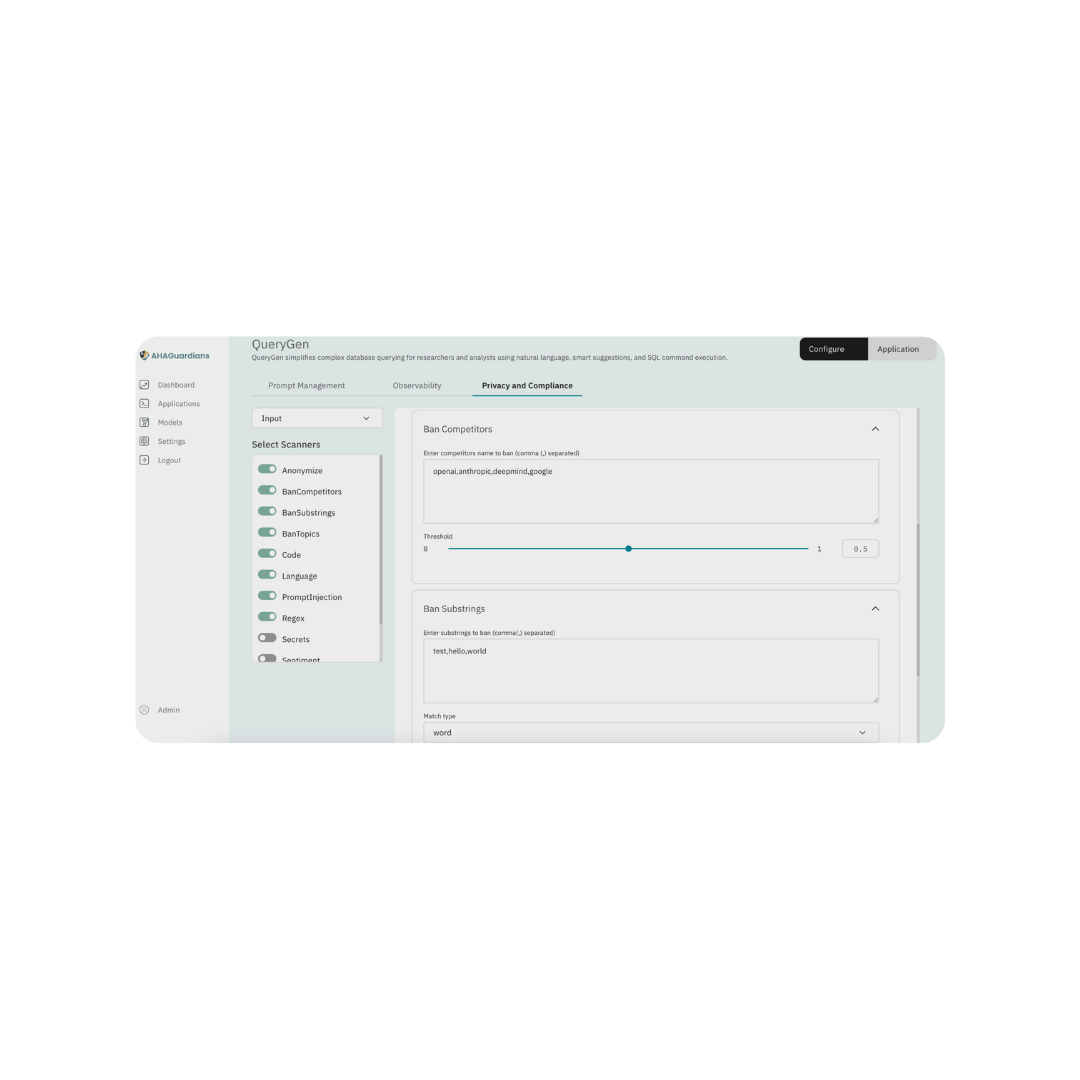

Prompt Safety Scanning

Scans user prompts for harmful content, bias, and sensitive data before processing.

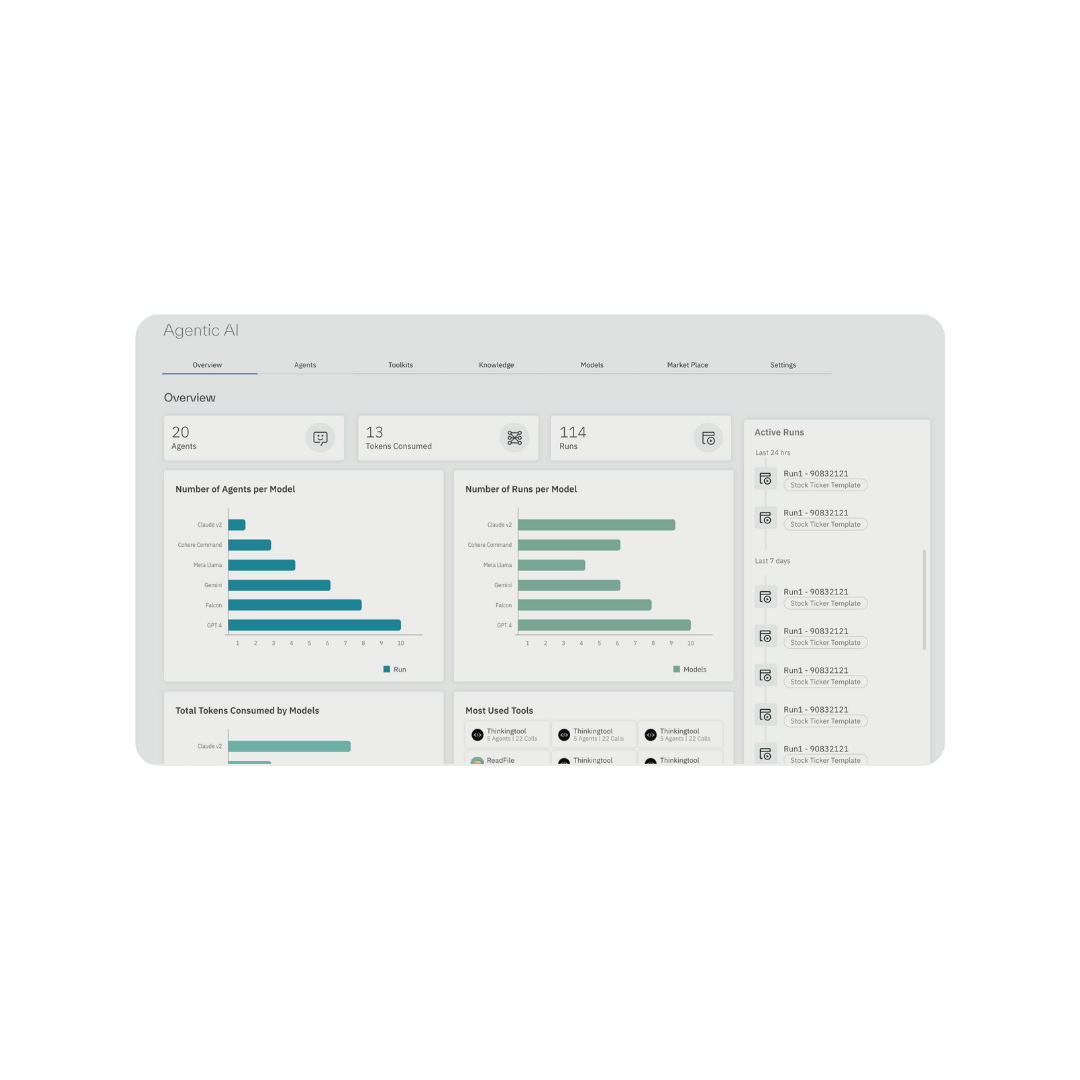

Agentic AI Auditing

Monitors and logs agent activities, decisions, and data access for compliance.

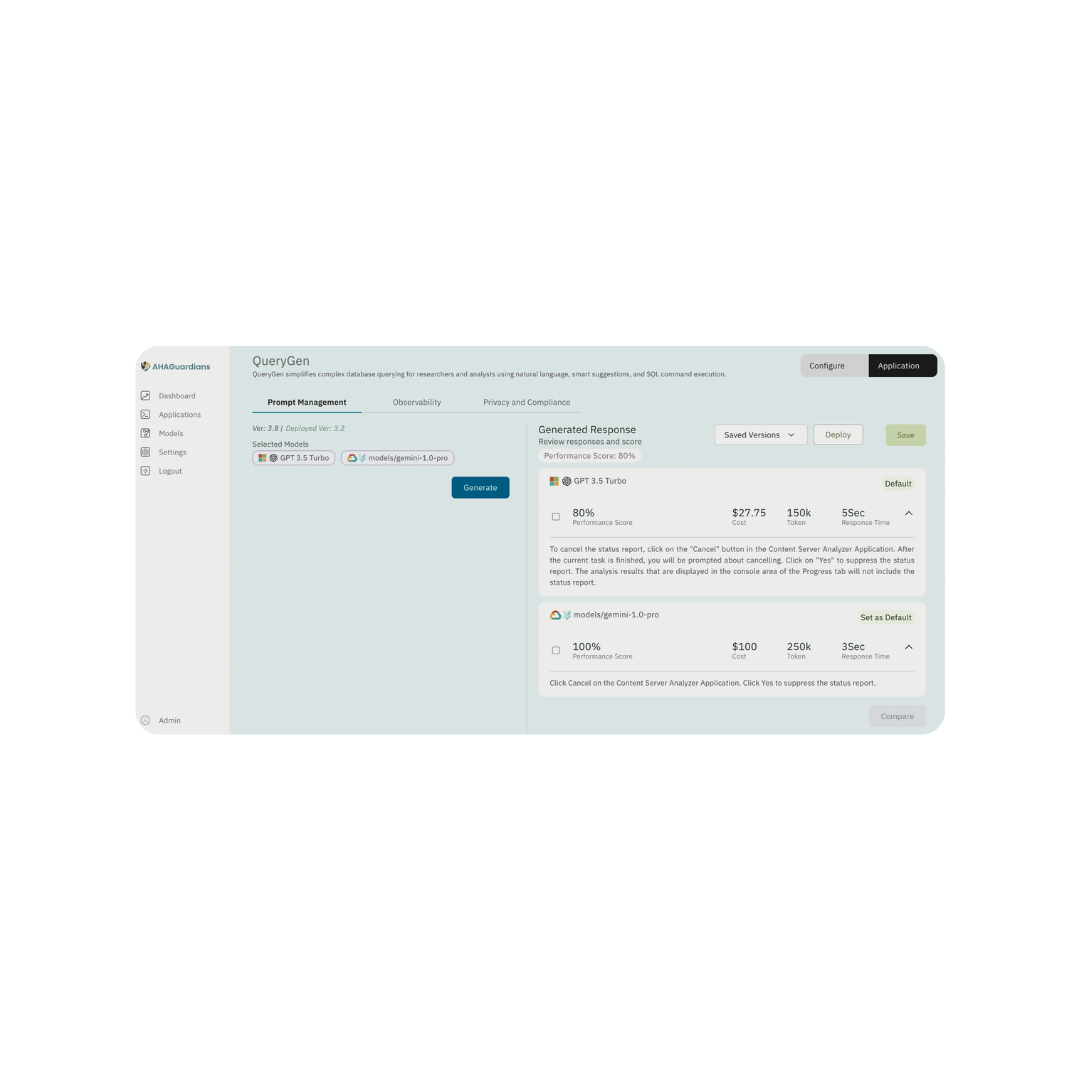

Generative Model Vetting

Evaluates generated content for quality, safety, accuracy, and ethical alignment.

Explainability Dashboard

Provides insights into AI reasoning, improving transparency and trust in model outputs.

Bias Detection & Mitigation

Identifies and mitigates biases in both model inputs and generated content.

Monitor and trace

Intelligent Observability

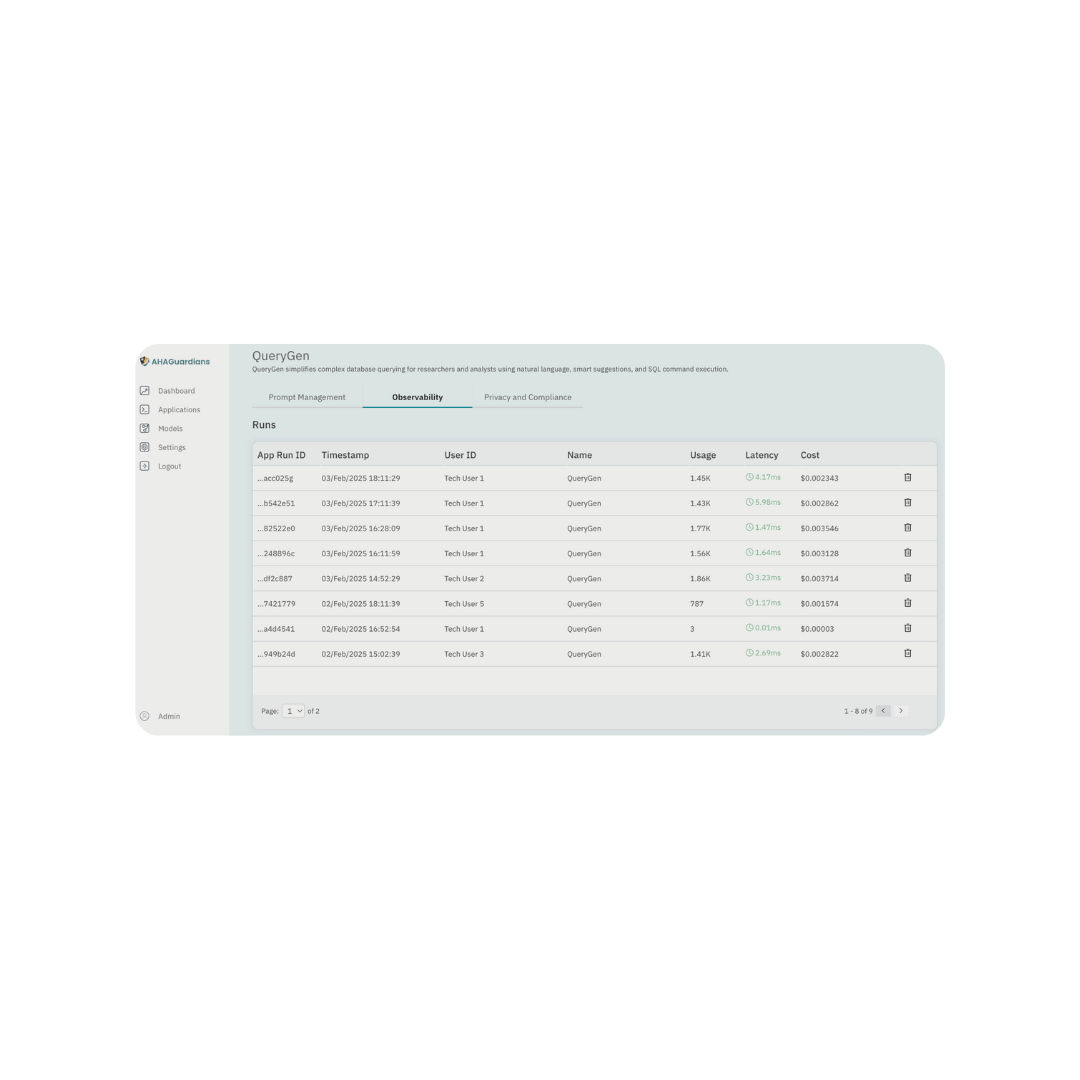

Prompt Provenance Tracking

Maintains a permanent record of all prompts for auditability and analysis.

Agent Activity Monitoring

Monitors agent actions and resource consumption for performance and security.

Model Output Traceability

Traces generated content back to its source prompts and model versions.

Generative AI Anomaly Detection

Detects anomalous model outputs and performance deviations in real time.

Agentic AI Performance Profiling

Profiles agent execution paths and resource utilization for optimization.

Compliance and audit

Security and Privacy

Content Filtering

Filters generated content to block harmful or inappropriate output.

Input/Output Risk Filtering

Scans inputs and outputs for potential security vulnerabilities and data leakage.

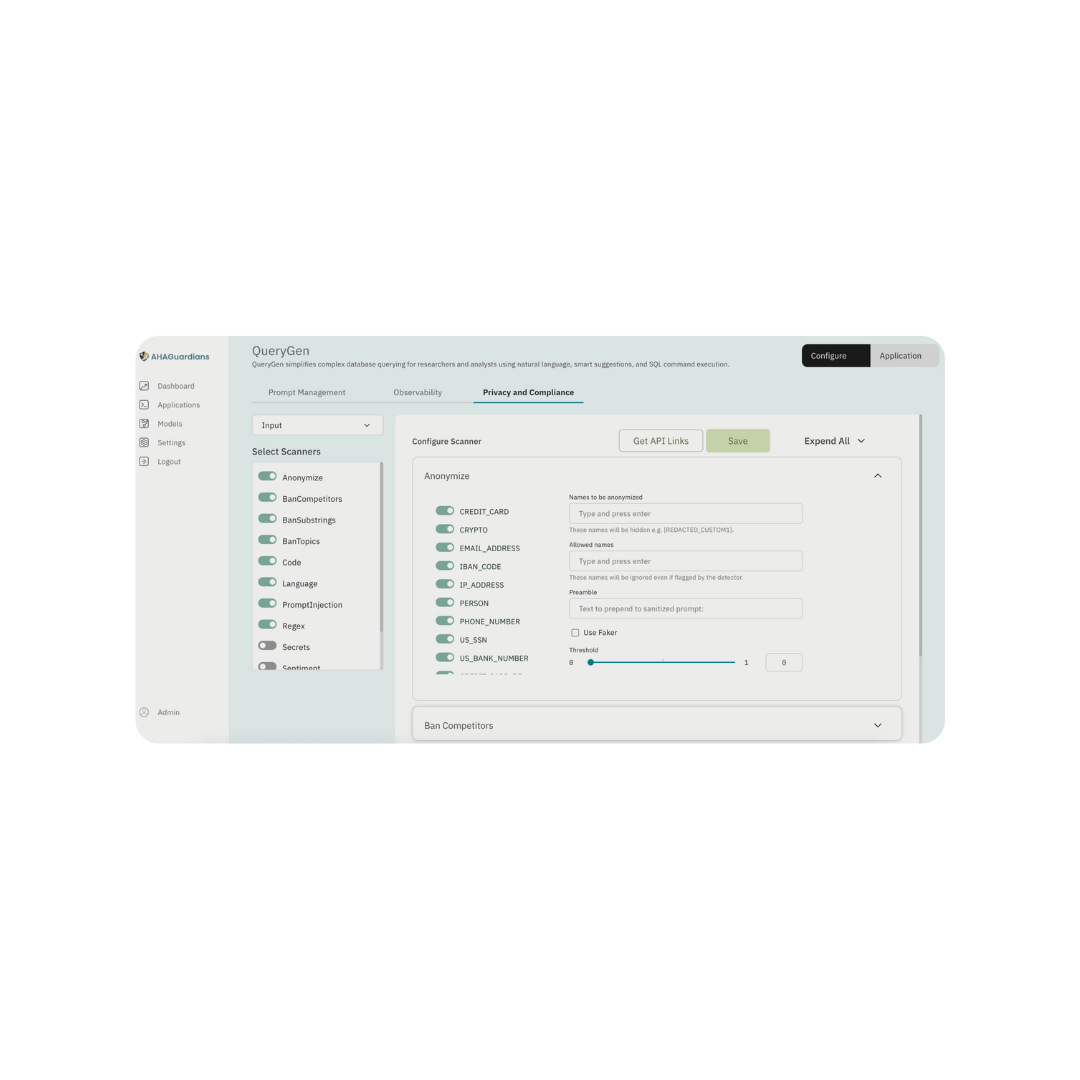

Data Anonymization

Anonymizes sensitive data used by AI models to protect user privacy.

Toxicity Detection

Identifies and flags toxic or offensive language in prompts and generated content.

Bias Detection & Mitigation

Detects and mitigates biases in model training data and generated outputs.
